Security operations teams depend on telemetry from endpoints, identity systems, cloud platforms, networks, and applications. Over the last few years, the volume, diversity, and cost of that telemetry have grown faster than most SIEM architectures were designed to handle. Licensing models tied to ingestion volume strain budgets. Log formats change frequently. Tool sprawl creates inconsistent schemas and formats. At the same time, compliance expectations require complete and reliable audit trails.

In response, organizations introduced a dedicated data layer between sources and analytics platforms. That layer has evolved into what analysts now call a Security Data Pipeline Platform, or SDPP.

Why the modern SOC needs a data control layer

Traditional SOC architectures assumed that logs could be forwarded directly into a SIEM, indexed, and correlated. That approach becomes fragile at scale. When ingestion costs rise, teams either reduce logging and risk blind spots, shorten retention and limit investigations, or absorb unsustainable spend. When schemas drift silently, detections break. When a log source stops sending data, the SOC may not notice until an investigation fails.

Security teams are increasingly concerned about noisy telemetry on the one hand and missing telemetry on the other. A single silent failure can invalidate detection coverage assumptions. As environments become hybrid and multi-cloud, telemetry reliability and consistency become architectural problems.

At the same time, SIEM platforms are evolving toward more modular models that separate storage from analytics. Data may live in object storage or lakehouse systems, while the SIEM focuses on correlation and real-time alerting. This shift requires an intermediary layer that governs how data is prepared and routed across multiple destinations.

That intermediary layer is the Security Data Pipeline Platform.

What is a security data pipeline platform?

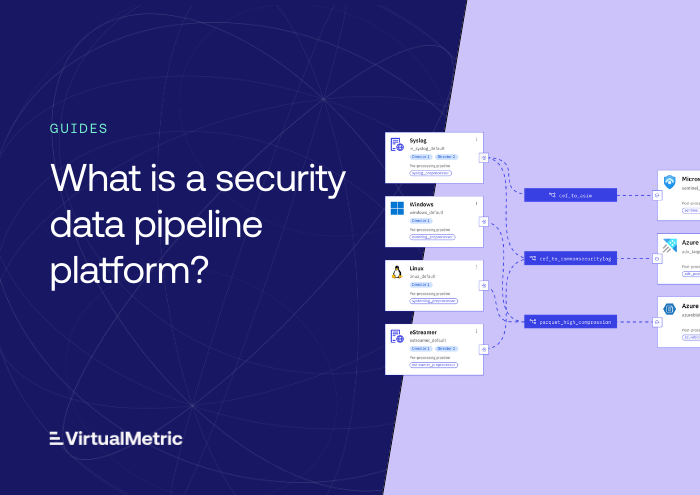

A Security Data Pipeline Platform is a purpose-built control layer that ingests, normalizes, enriches, filters, routes, and monitors security telemetry before it reaches downstream systems such as SIEM, XDR, SOAR, or security data lakes.

It sits between telemetry sources and analytics platforms. Its primary function is to govern the flow, quality, cost, and reliability of security data across the SOC stack.

Unlike generic ETL tools or observability pipelines, an SDPP is designed specifically for security telemetry. It understands detection requirements, schema standards such as OCSF, ECS, ASIM, and UDM, and the operational realities of SIEM economics and compliance reporting.

Where an SDPP sits in the architecture

In a modern SOC architecture, security telemetry generated by sources such as EDR, firewalls, identity providers, cloud audit logs, and applications passes through the Security Data Pipeline Platform before reaching downstream analytics systems.

SDPP architectures differ in deployment models. Some platforms centralize telemetry processing in vendor-managed infrastructure. Others execute ingestion, normalization, reduction, and routing within the customer’s own environment. In this model, telemetry remains under the organization’s operational control and is forwarded only to explicitly defined destinations. This approach supports strict data residency requirements, simplifies compliance alignment, and reduces dependency on external processing layers.

As telemetry moves through the pipeline layer, it is processed in transit and forwarded to one or more destinations. These may include SIEM platforms for real-time detection, data lakes for long-term retention, XDR systems, analytics engines, or storage platforms.

By decoupling sources from destinations, the SDPP allows organizations to route data to multiple systems simultaneously, migrate between SIEM platforms with lower risk, and align storage tiers with data value. The SIEM becomes a curated analytics layer fed with high-fidelity, normalized events rather than a monolithic ingestion endpoint.

Core capabilities of a security data pipeline platform

An SDPP is more than a log forwarder. It provides structured governance over several critical dimensions of security telemetry.

1. Telemetry ingestion and integration

A Security Data Pipeline Platform receives telemetry from diverse sources across the environment, including endpoint agents, network devices, identity systems, cloud-native logs, APIs, and syslog streams. Rather than replacing source-level logging mechanisms, it integrates with them, acting as the processing layer through which telemetry flows before reaching downstream systems. The platform supports structured and unstructured formats, handles high-throughput ingestion reliably, and provides a consistent integration point for onboarding new data sources without requiring changes across every analytics destination.

2. Intelligent data reduction

Security data reduction is not blind filtering. Effective platforms apply context-aware suppression, field-level trimming, adaptive sampling, and summarization techniques that reduce ingestion volume while preserving detection-relevant fields. The objective is to cut costs without degrading investigative value. Analysts consistently cite ingestion cost pressure as a primary driver for pipeline adoption.
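
The reduction techniques described above can be sketched in a few lines of Python. This is a minimal illustration rather than any vendor's implementation: the field allowlist, the suppressed event types, and the function names `reduce_event` and `summarize` are all hypothetical.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List, Optional

# Hypothetical policy: fields that detections rely on, and event types treated as noise.
KEEP_FIELDS = {"timestamp", "host", "user", "event_type", "src_ip", "dst_ip", "action"}
SUPPRESS_EVENT_TYPES = {"heartbeat", "debug"}


def reduce_event(event: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Suppress known-noise events; otherwise trim to detection-relevant fields."""
    if event.get("event_type") in SUPPRESS_EVENT_TYPES:
        return None  # context-aware suppression
    # Field-level trimming: drop everything outside the allowlist.
    return {k: v for k, v in event.items() if k in KEEP_FIELDS}


def summarize(events: Iterable[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Collapse repeated events into one record per (host, event_type, action)."""
    counts = Counter(
        (e.get("host"), e.get("event_type"), e.get("action")) for e in events
    )
    return [
        {"host": h, "event_type": t, "action": a, "count": n}
        for (h, t, a), n in counts.items()
    ]
```

In practice the allowlist would be derived from the fields that active detection rules reference, so that trimming never removes something a rule depends on.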

3. Normalization and schema discipline

Detection engineering depends on consistent field naming and structure. An SDPP parses raw logs, maps them into common schemas, and monitors for schema drift. When vendors change log formats, the pipeline surfaces those changes early so detection logic does not silently fail. Practitioners repeatedly identify normalization as foundational to mature detection programs.
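
As a rough sketch of the idea, the following Python maps a raw event into common field names and reports drift when the source's fields no longer match what the mapping expects. The mapping table and target names are illustrative assumptions and are not actual OCSF, ECS, ASIM, or UDM attribute names.

```python
from typing import Any, Dict, List, Set, Tuple

# Hypothetical mapping from one vendor's field names to a common schema.
FIELD_MAP = {"ts": "timestamp", "src": "src_ip", "dst": "dst_ip", "usr": "user"}


def normalize(raw: Dict[str, Any]) -> Tuple[Dict[str, Any], Set[str]]:
    """Return the normalized event plus any source fields with no known mapping."""
    normalized: Dict[str, Any] = {}
    unmapped: Set[str] = set()
    for key, value in raw.items():
        target = FIELD_MAP.get(key)
        if target is None:
            unmapped.add(key)
        else:
            normalized[target] = value
    return normalized, unmapped


def detect_drift(raw: Dict[str, Any]) -> Dict[str, List[str]]:
    """Compare an event's fields against the mapping to surface format changes."""
    return {
        "missing": sorted(set(FIELD_MAP) - set(raw)),      # expected but absent
        "unexpected": sorted(set(raw) - set(FIELD_MAP)),   # present but unmapped
    }
```

A non-empty `missing` list is the more urgent signal, because a detection that filters on a field that has disappeared will stop matching without raising any error.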

4. Contextual enrichment

Raw logs often lack the context required for prioritization and investigation. Pipelines enrich events with asset data, identity attributes, threat intelligence, geolocation, and business context before forwarding them downstream. Enrichment in transit reduces triage time and improves correlation quality across tools.
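
In-transit enrichment amounts to joining each event against reference data before it is forwarded. The sketch below assumes two hypothetical in-memory lookups, an asset inventory and a threat intelligence set; a real pipeline would typically refresh these from external systems.

```python
from typing import Any, Dict

# Hypothetical reference data; the IPs are from private and documentation ranges.
ASSET_INVENTORY = {"10.0.0.5": {"owner": "finance", "criticality": "high"}}
THREAT_INTEL_IPS = {"203.0.113.7"}


def enrich(event: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the event with asset and threat context attached."""
    enriched = dict(event)  # leave the original event untouched
    asset = ASSET_INVENTORY.get(event.get("src_ip"))
    if asset is not None:
        enriched["asset"] = asset
    enriched["dst_known_bad"] = event.get("dst_ip") in THREAT_INTEL_IPS
    return enriched
```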

5. Intelligent routing and storage tiering

Not all telemetry has equal value. An SDPP routes high-signal events to hot storage for immediate detection, while directing lower-priority or compliance-focused logs to cost-efficient object storage. Modern SIEM strategies increasingly rely on decoupled storage and analytics. The pipeline becomes the policy engine that decides where each event should reside.
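
A routing policy of this kind can be expressed as a small function that returns the destinations for each event. The destination names, the set of detection-critical event types, and the severity threshold below are assumptions chosen for illustration.

```python
from typing import Any, Dict, List

# Hypothetical policy inputs.
HOT_EVENT_TYPES = {"auth_failure", "process_start", "malware_detected"}
SEVERITY_THRESHOLD = 7


def route(event: Dict[str, Any]) -> List[str]:
    """Decide which destinations should receive the event."""
    destinations = ["object_storage"]  # everything is retained in the cheap tier
    is_hot = (
        event.get("event_type") in HOT_EVENT_TYPES
        or event.get("severity", 0) >= SEVERITY_THRESHOLD
    )
    if is_hot:
        destinations.append("siem")  # high-signal events also go to hot storage
    return destinations
```

Returning a list rather than a single target reflects the multi-destination model: the same event can be archived for compliance and indexed for detection at once.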

6. Telemetry integrity and health monitoring

Noise is manageable; silence is dangerous. Advanced SDPPs continuously monitor ingestion baselines, detect silent source failures, identify abnormal volume deviations, and track destination health. They provide visibility into whether telemetry coverage assumptions are actually valid. Analysts highlight this as a growing requirement as SOCs mature.
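
Two of these checks, silent-source detection and volume deviation, are simple to sketch. The example assumes the pipeline tracks a last-seen timestamp and a baseline event count per source; the function names and the default tolerance are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Dict, List


def find_silent_sources(
    last_seen: Dict[str, datetime], now: datetime, max_silence: timedelta
) -> List[str]:
    """Return sources that have sent nothing for longer than max_silence."""
    return sorted(
        source for source, seen in last_seen.items() if now - seen > max_silence
    )


def volume_deviates(current: int, baseline: int, tolerance: float = 0.5) -> bool:
    """True when the current count differs from baseline by more than tolerance."""
    if baseline == 0:
        return current > 0  # any traffic from a normally idle source is notable
    return abs(current - baseline) / baseline > tolerance
```

A fixed tolerance is the simplest possible baseline model. Sources with strong daily or weekly cycles would need a baseline that varies by time window to avoid false alarms.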

7. Policy-driven governance

Security data handling increasingly intersects with compliance, privacy, and audit requirements. Pipelines enforce masking and redaction policies, maintain transformation lineage, and provide centralized governance over how telemetry is shaped and routed. This consolidates data policy enforcement in one architectural layer rather than scattering it across tools.
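
The following sketch shows one way masking, redaction, and transformation lineage could fit together in a single policy step. The field lists, the `_lineage` key, and the email pattern are assumptions made for the example. Note that an unsalted SHA-256 hash is only a pseudonym that keeps values correlatable; it is not strong anonymization.

```python
import hashlib
import re
from typing import Any, Dict, List

# Hypothetical policy: which fields to mask outright and which to pseudonymize.
MASK_FIELDS = {"password", "api_key"}
HASH_FIELDS = {"user"}
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def apply_policy(event: Dict[str, Any]) -> Dict[str, Any]:
    """Mask, hash, or redact sensitive values and record what was changed."""
    out: Dict[str, Any] = {}
    lineage: List[str] = []
    for key, value in event.items():
        if key in MASK_FIELDS:
            out[key] = "***"
            lineage.append(f"masked:{key}")
        elif key in HASH_FIELDS:
            out[key] = hashlib.sha256(str(value).encode()).hexdigest()
            lineage.append(f"hashed:{key}")
        elif isinstance(value, str) and EMAIL_RE.search(value):
            out[key] = EMAIL_RE.sub("<email>", value)
            lineage.append(f"redacted_email:{key}")
        else:
            out[key] = value
    out["_lineage"] = lineage  # lets auditors see which transformations ran
    return out
```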

Together, these capabilities elevate the pipeline from a cost-optimization utility to a formal control layer in the SOC.

Why the SDPP is becoming the SOC control plane

Industry research increasingly describes security data pipelines as the control plane of modern security operations. The reason is straightforward: every downstream detection, analytics, and AI system depends on the quality and consistency of telemetry it receives.

When ingestion, normalization, routing, and data health are centralized, security teams gain deterministic control over their telemetry lifecycle. They can express transformation logic once and apply it consistently across destinations. They can migrate platforms without rewriting every source integration. They can quantify cost reduction and storage allocation policies. They can verify that critical log sources are active and complete.

Control over telemetry directly influences detection accuracy, investigation speed, compliance reporting, and overall SOC economics.

SDPP vs. other categories

Because the category is still maturing, it is often confused with adjacent technologies.

SDPP vs. SIEM

A SIEM ingests, stores, correlates, and alerts on security events. An SDPP preprocesses and governs telemetry before it reaches the SIEM. The two are complementary. Modern architectures increasingly rely on pipelines to keep SIEM ingestion sustainable and detection logic stable.

SDPP vs. telemetry pipeline

Telemetry pipelines in observability handle logs, metrics, and traces for operational monitoring. A Security Data Pipeline Platform is focused specifically on security telemetry and detection requirements. It incorporates schema normalization, threat enrichment, compliance controls, and SIEM economics that generic observability pipelines do not prioritize.

SDPP vs. business ETL

Business ETL systems transform structured data for reporting and analytics, often in batch processes. SDPPs operate in near real time, process semi-structured and unstructured logs, and apply security-specific logic such as schema mapping to OCSF or ASIM, in-stream enrichment, and policy-based routing.

SDPP vs. security data fabric

A security data fabric describes a broader architectural vision that unifies ingestion, storage, governance, and analytics. An SDPP is a concrete platform category focused on the telemetry transformation and routing layer within that architecture.

Clear differentiation matters for architectural planning and vendor evaluation.

The business and operational value of a security data pipeline platform

Security Data Pipeline Platforms are often introduced to reduce SIEM ingestion costs, but their impact extends well beyond licensing economics.

Intelligent reduction lowers ingestion volume while preserving high-value, detection-relevant fields. Instead of forwarding every raw event, the pipeline applies context-aware suppression, field trimming, summarization, and conditional logic to retain investigative fidelity while eliminating noise. This directly reduces storage and indexing costs.

Routing policies then align data with appropriate storage tiers. Detection-critical telemetry can remain in high-performance indexed environments, while compliance or lower-priority logs are routed to cost-efficient object storage. As SIEM architectures evolve toward separating storage from analytics, this routing discipline becomes central to sustainable cost control.

Normalization performed once in the pipeline eliminates redundant parsing and transformation logic across multiple tools. Detection engineers no longer need to maintain separate field mappings or custom scripts in each downstream platform. This reduces manual engineering overhead and minimizes the risk of broken detections when schemas change.

Migration support adds further economic flexibility. By decoupling sources from destinations, teams can test and compare SIEM platforms in parallel without reconfiguring every data source. Telemetry portability becomes an architectural capability rather than a risky, one-time project.

Beyond cost and efficiency, SDPPs improve detection reliability. Consistent schema discipline and telemetry health monitoring reduce blind spots caused by silent source failures or unnoticed format changes. Centralized visibility into ingestion completeness strengthens confidence in coverage metrics and audit claims.

Operationally, centralizing reduction, normalization, enrichment, and routing policies simplifies the security data lifecycle. Instead of scattering transformation logic across SIEM, XDR, and data lake platforms, teams govern telemetry behavior in one layer. This reduces configuration drift, accelerates onboarding of new log sources, and shortens the time required to operationalize detections.

Cost optimization, therefore, is not simply a matter of filtering rules. It is the outcome of architectural control over how telemetry is shaped, stored, and governed across the SOC stack.

The role of AI and automation in SDPPs

Practitioners express strong interest in using AI within pipelines for engineering-heavy tasks such as parser generation, schema drift detection, and anomaly baselining. At the same time, most teams remain cautious about fully autonomous decision-making in security operations.

Within an SDPP, AI is typically applied as assistive automation. It can recommend reduction logic, generate parsing rules from sample logs, identify unusual volume patterns, or suggest routing adjustments. The core governance model remains policy-driven and transparent. Automation augments engineering capacity rather than replacing human oversight.

As AI-driven detection and analytics systems become more prevalent, the quality of upstream telemetry becomes even more critical. Pipelines provide the structured, normalized, and enriched data foundation required for those systems to operate reliably.

Example: VirtualMetric DataStream as an SDPP

VirtualMetric DataStream is an example of a Security Data Pipeline Platform that operates between telemetry sources and analytics platforms, performing all ingestion and processing within the customer’s environment to maintain full control over data residency and compliance boundaries. It provides deterministic reduction, schema normalization into widely used security formats, enrichment, and intelligent routing across SIEM and storage targets.

DataStream includes telemetry health monitoring capabilities that surface silent source failures and schema changes, as well as write-ahead logging mechanisms to ensure durability and zero data loss during processing. By centralizing transformation and routing logic, it allows security teams to maintain control over cost, quality, and data lifecycle across hybrid environments.

Final thoughts

Security Data Pipeline Platforms have emerged as a foundational layer in modern SOC architectures. They address structural pressures created by data growth, cost models, schema fragmentation, and compliance requirements. By governing ingestion, normalization, enrichment, routing, and telemetry integrity, they shape how every downstream system performs.

As SIEM platforms evolve toward modular, query-oriented architectures and AI systems demand high-quality input data, the importance of this control layer continues to increase. Organizations evaluating their security data strategy should view the SDPP not as an optional optimization tool, but as an architectural component that defines the reliability, economics, and agility of the SOC stack.

For organizations routing security telemetry to SIEM, VirtualMetric DataStream provides full pipeline functionality during the free trial with up to 500 GB/day of ingest, helping you get maximum insight from your data while keeping costs under control.

Analysts say

“Security data pipelines are becoming foundational to modern SOC architectures.”
Software Analyst Cyber Research (SACR)

“If You’re Not Using Data Pipeline Management For Security And IT, You Need To.”
Forrester
